Content Moderation

This guide walks you through integrating TrustPath.io's content moderation capabilities to detect and prevent prohibited, fraudulent, or harmful user-generated content in real time.

TrustPath's content_moderation event type allows you to evaluate the risk associated with textual content submitted by users. This can be used to screen posts, messages, product listings, or comments before they are published on your platform.

When to Use

Content moderation should be integrated wherever your platform allows user-generated text. This includes, but is not limited to:

- Comments on posts or videos

- Product or service reviews

- Forum threads and replies

- Chat messages and private DMs

- User-submitted advertisements or listings

- Profile descriptions or usernames

Why Content Moderation Matters

Unchecked content can have serious consequences for platforms, users, and brand reputation. Some of the key risks of not performing proper moderation include:

- User trust and safety: Exposure to scams, hate speech, or explicit material can harm users and drive away your community.

- Brand reputation: Platforms that fail to moderate harmful or misleading content may lose the trust of advertisers, users, and investors.

- Search engine and app store penalties: Major platforms like Google and Apple penalize apps and sites that allow prohibited content.

- Increased fraud: Malicious actors may use unmoderated platforms to spread misinformation, phishing links, or fraudulent promotions.

- Legal liability: Hosting or distributing content that promotes illegal activity (e.g., the sale of prescription drugs without a license) may result in regulatory fines or platform takedowns.

Benefits of Using TrustPath for Moderation

TrustPath's content_moderation evaluation helps you detect and block:

- Scam and phishing messages

- Hate speech, discrimination, and offensive language

- Negative sentiment or psychological manipulation

- Prohibited content such as drugs, weapons, or adult material

- Non-compliant content in regulated industries

By integrating content moderation into your product flow, you can:

- Automate protection without hiring large moderation teams

- Adapt dynamically to evolving threat patterns and content tactics

- Maintain a safe, inclusive, and compliant environment for all users

Prerequisites

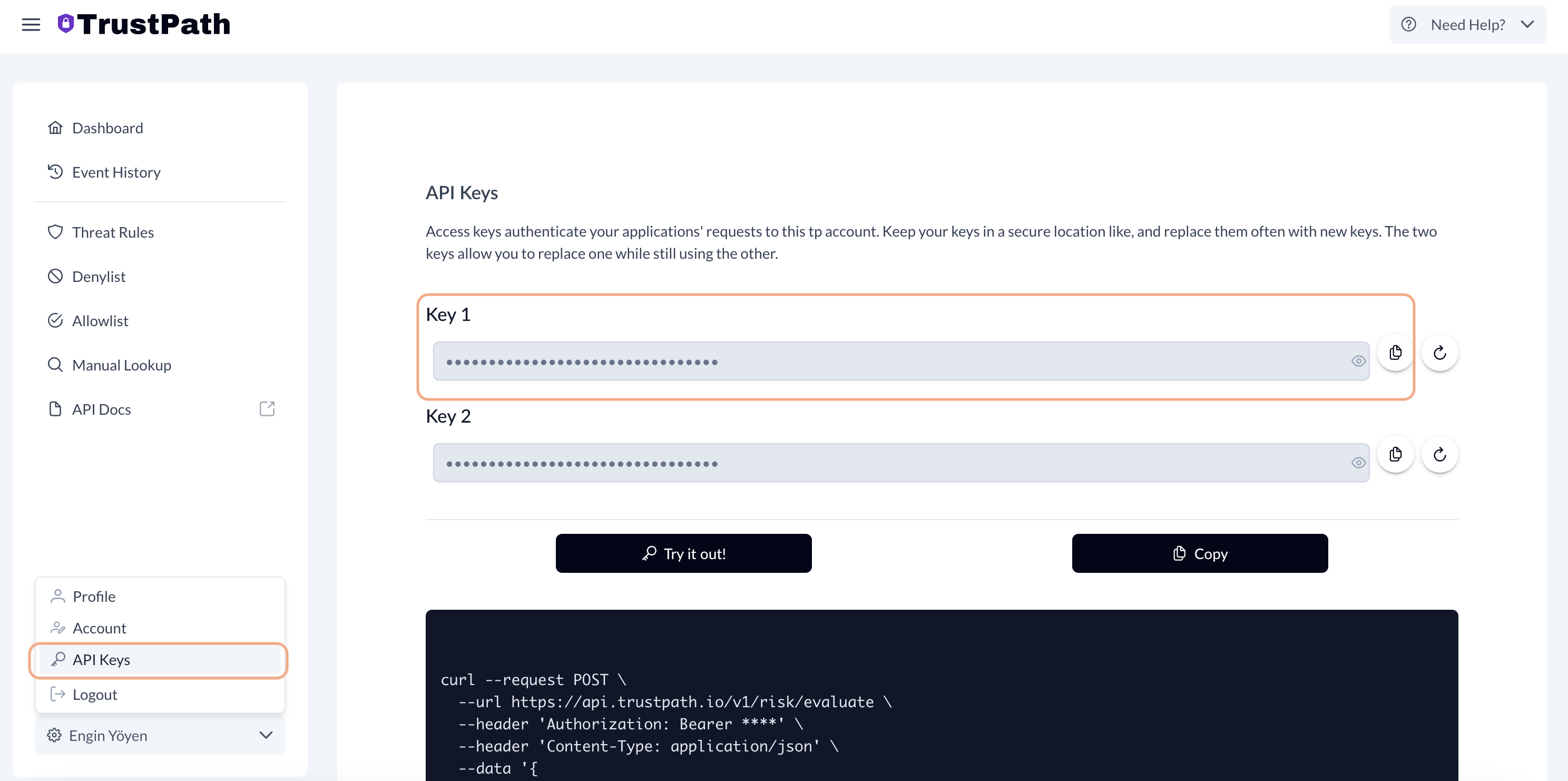

The first step is to create an account on TrustPath.io and obtain your API key.

- Go to https://trustpath.io and click on Sign In.

- Once registered, navigate to the API Key section from the lower-left menu.

- Copy your API key for use in your application.

Alternatively, you can copy the preconfigured curl request from the same page to test the system immediately.

TrustPath.io Threat Detection Rules

TrustPath.io threat detection rules are individual risk assessment criteria designed to identify and flag potentially fraudulent activity. Each rule evaluates specific signals—such as behavior patterns or metadata—to detect suspicious events and assigns a corresponding risk score.

The total risk score for an event ranges from 0 to 100, where:

- 0 indicates no risk (safe to approve)

- 100 indicates high risk (likely fraudulent, recommended to decline)

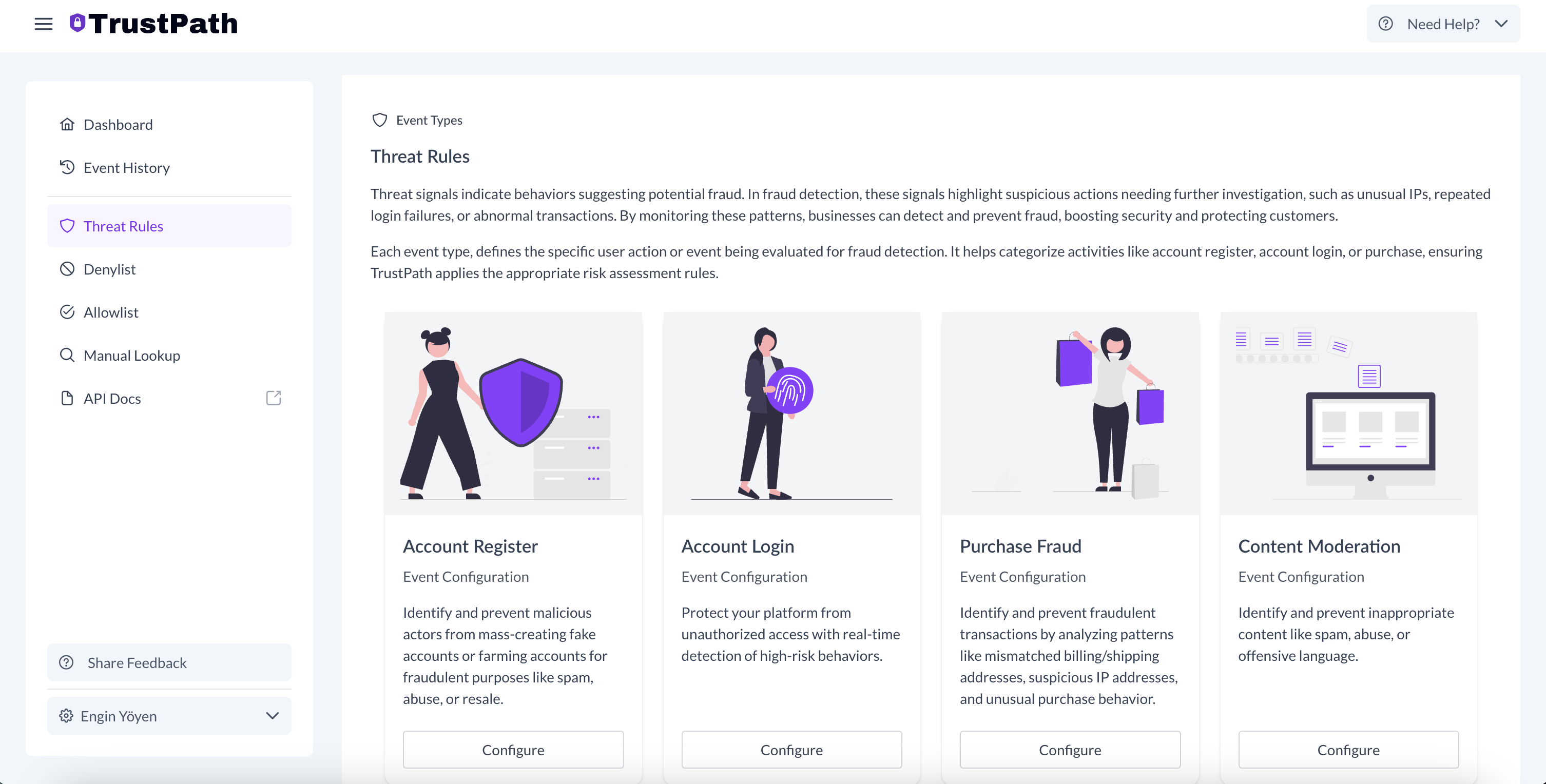

Configuring content_moderation Event Detection

To set up detection for content moderation events:

- Go to the ThreatRules section in the dashboard.

- Select the Content Moderation card.

- Use TrustPath.io's default rules or define custom rules to fit your needs.

This lets you tailor your risk scoring and automate responses based on event threat levels.

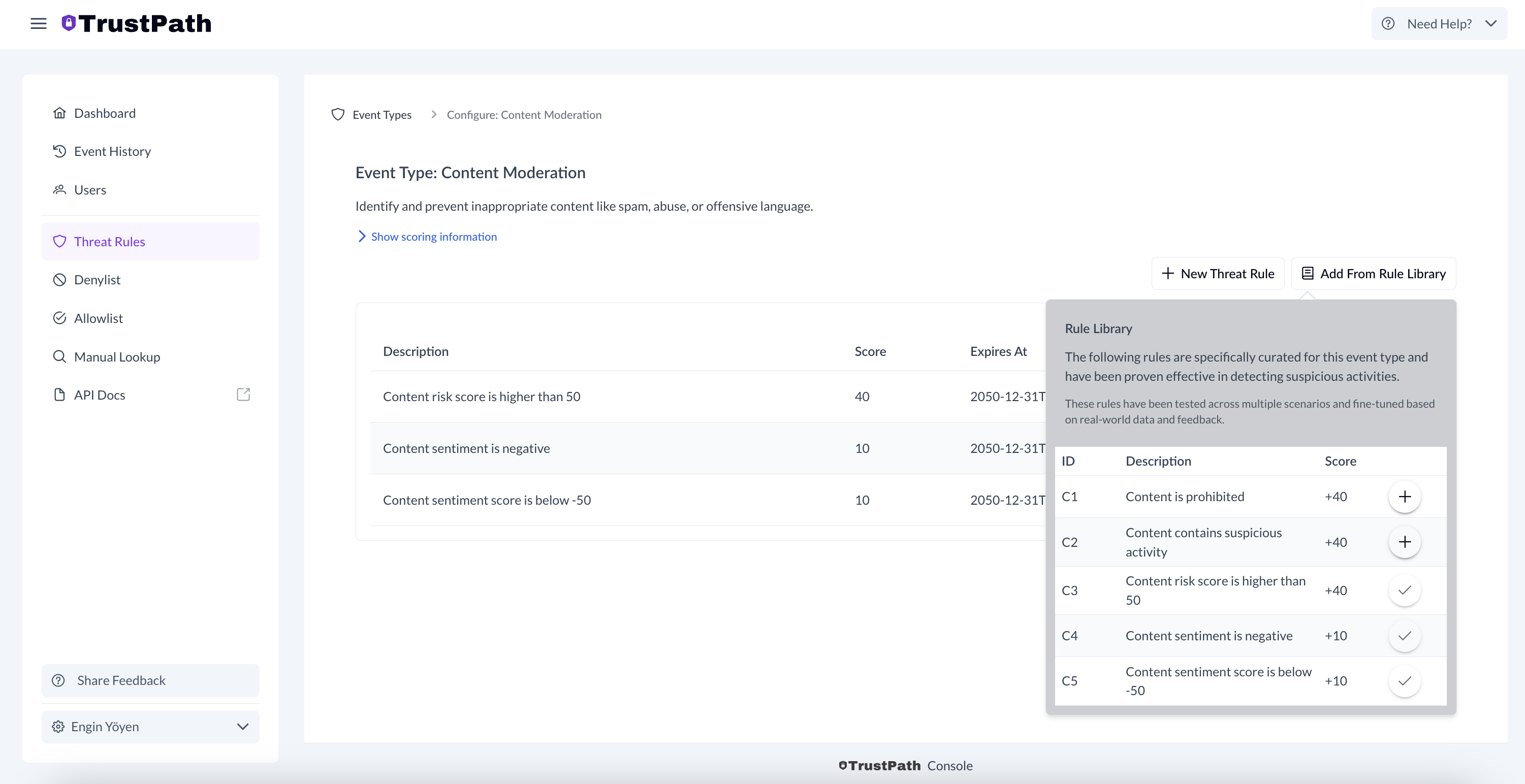

Adding Rules to the content_moderation Event

To strengthen detection of harmful content:

- In ThreatRules, open the Content Moderation event.

- Click Add Rule From Library (top-right).

- Select relevant rules from the available list, for example:

  - Content is prohibited

  - Content contains suspicious activity

  - Content sentiment is negative

  - Content risk score is higher than 50

Once added, these rules automatically trigger a content check every time you make an API call with textual content, improving your ability to catch malicious content in real time.

For textual content, the TrustPath.io API returns not only a numeric score but also a textual explanation of its reasoning. An example response is shown in the Understanding the Response section below.

Integrating TrustPath.io with Your System

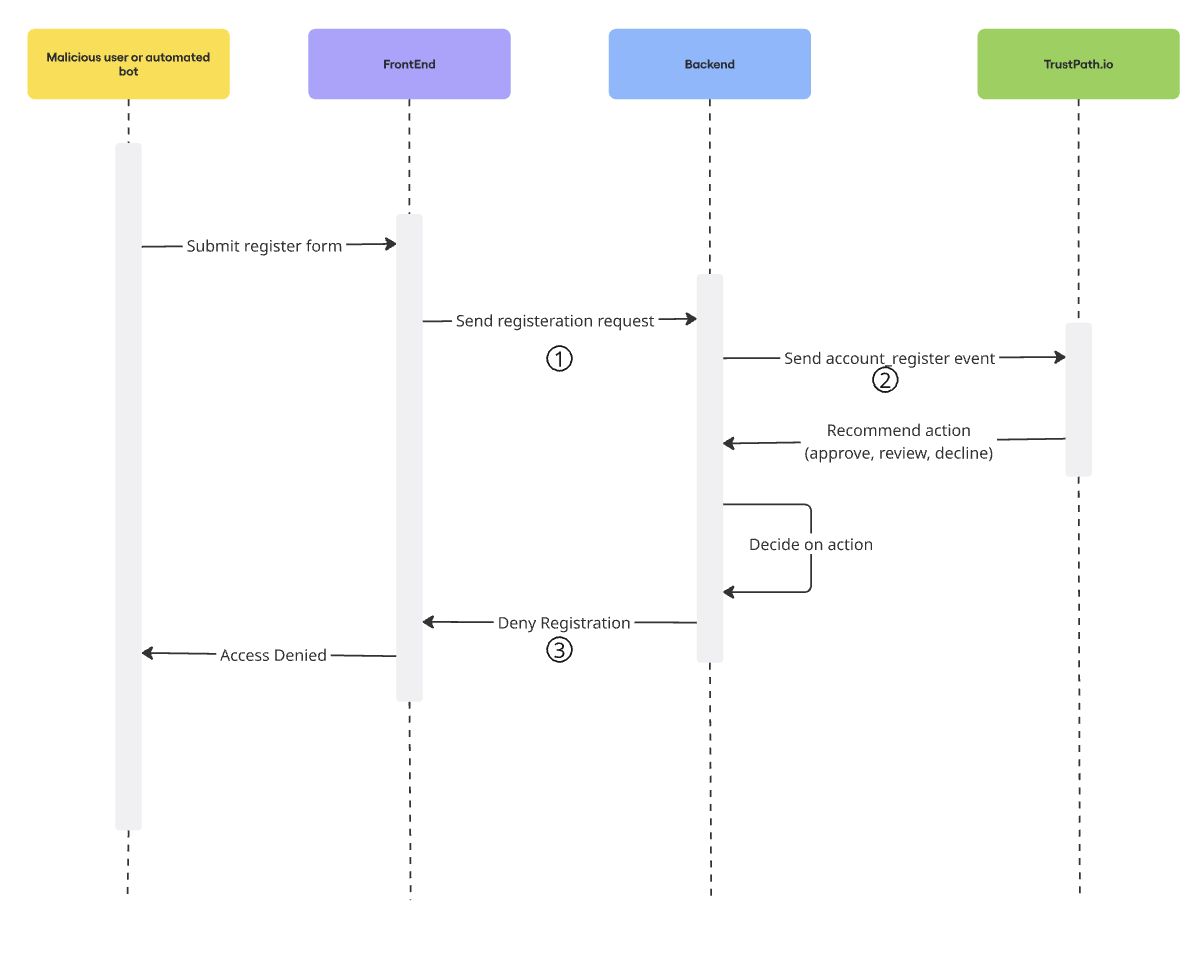

Now that everything is set up in TrustPath.io, the next step is to integrate it with your backend system.

The following flow diagram illustrates how this works:

When a user, or an automated bot, submits a post, comment, listing, or other text, the request first reaches your backend. Rather than publishing the content immediately, your backend sends a request to the TrustPath.io API that includes the content text along with the user's IP address and email.

TrustPath.io then enriches the incoming data and evaluates it against the threat detection rules you configured earlier. The response includes:

- A detailed breakdown of detected risks or issues

- A risk score between 0 and 100, which maps to a recommended action:

  - 0–20: Approve

  - 20–40: Review

  - 40–100: Decline

Based on this response, your backend can make an informed decision: publish the content, flag it for manual review, or block it entirely.
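The score-to-action mapping above is small enough to express directly in code. The Python below is a minimal sketch, assuming each range's lower bound is inclusive (the listed ranges share their endpoints at 20 and 40, so a score of exactly 20 or 40 is otherwise ambiguous). The names recommended_action, REVIEW_FROM, and DECLINE_FROM are hypothetical. Note that the example response later in this guide also carries a state field ("decline") that appears to hold this recommendation already, so a local mapping like this is mainly useful if you want thresholds of your own.

```python
# Thresholds from the ranges listed above. The guide's ranges share their
# endpoints (20 and 40), so this sketch ASSUMES each lower bound is
# inclusive: 20 maps to "review" and 40 maps to "decline".
REVIEW_FROM = 20
DECLINE_FROM = 40


def recommended_action(score: float) -> str:
    """Map a 0-100 TrustPath.io risk score to approve / review / decline."""
    if not 0 <= score <= 100:
        raise ValueError(f"risk score must be between 0 and 100, got {score}")
    if score >= DECLINE_FROM:
        return "decline"
    if score >= REVIEW_FROM:
        return "review"
    return "approve"


# Usage: your submission handler would publish, queue for review, or reject
# based on the returned action.
for score in (5, 25, 100.0):
    print(score, "->", recommended_action(score))
```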

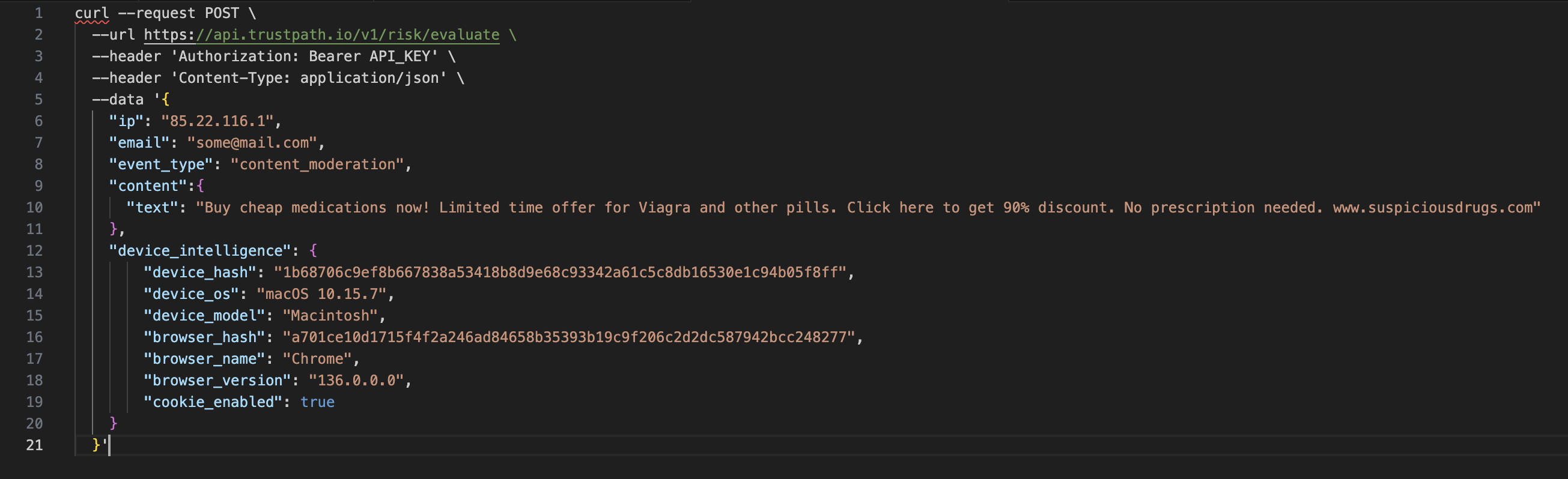

What the Request Looks Like

The following curl request highlights how straightforward the integration is.

To perform a threat assessment, include these four fields:

- content.text – the content under review, such as a comment, post, or listing

- email – the user's email address

- ip – the user's IP address

- event_type – the type of event (e.g., content_moderation)
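To show how those four fields fit together in a request body, here is a minimal Python sketch using only the standard library. It assumes the dotted name content.text means a nested object ({"content": {"text": ...}}); confirm the exact shape against the preconfigured curl request in your dashboard. The endpoint URL and the way your API key is sent are not stated in this guide, so send_assessment takes both from the caller instead of guessing them. The function names are hypothetical.

```python
import json
import urllib.request


def build_moderation_payload(text: str, email: str, ip: str) -> dict:
    """Build the request body from the four fields listed above.

    ASSUMPTION: "content.text" denotes a nested object. Check the curl
    example in the TrustPath.io dashboard for the exact structure.
    """
    return {
        "event_type": "content_moderation",
        "email": email,
        "ip": ip,
        "content": {"text": text},
    }


def send_assessment(url: str, headers: dict, payload: dict) -> dict:
    """POST the payload as JSON and return the decoded response.

    `url` and `headers` (including how the API key is passed) are supplied
    by the caller; copy them from the dashboard's curl example.
    """
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", **headers},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


payload = build_moderation_payload(
    "Buy meds without a prescription, 90% off!", "user@example.com", "203.0.113.7"
)
print(json.dumps(payload, indent=2))
```

Keeping the request construction separate from the network call makes the payload easy to unit-test without hitting the API.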

As shown in the curl command above, additional data such as the user's IP address and device intelligence is sent along with the content itself. These extra details help flag suspicious requests. For example, if the IP address is identified as originating from a data center, it's a strong indicator that the request is automated and likely comes from a bot.

Understanding the Response

The response from TrustPath.io provides a comprehensive set of insights based on the data you submit: most notably the content, but also the associated email, IP address, and device intelligence when available.

While all these inputs contribute to the overall risk analysis, this section focuses specifically on the content portion of the response, which is most relevant for content moderation use cases.

Key Content Response Fields

Here are the primary fields to pay attention to when evaluating submitted text:

| Field | Description |
|---|---|
| prohibited_content | Indicates whether the content includes banned or illegal material (e.g., drugs, hate speech, adult content). |
| suspicious_activity_detected | Flags potential fraud signals, scams, or deceptive offers within the content. |
| sentiment_rating | General tone of the content (positive, neutral, or negative). |
| sentiment_score | Numerical representation of sentiment intensity, typically ranging from -100 to +100. |
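As a sketch of how these fields might drive a decision, the Python below reads the threat_signal object (located at data.content.threat_signal in the example response later in this guide) and applies one possible blocking policy. The policy itself, including the -50 sentiment cutoff borrowed from the "Content sentiment score is below -50" rule name, is an assumption to adapt to your platform, not TrustPath.io's own logic. ThreatSignal, parse_threat_signal, and should_block are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ThreatSignal:
    prohibited_content: bool
    suspicious_activity_detected: bool
    sentiment_rating: str  # "positive", "neutral", or "negative"
    sentiment_score: int   # typically -100 to +100


def parse_threat_signal(response: dict) -> ThreatSignal:
    """Pull data.content.threat_signal out of a decoded API response."""
    signal = response["data"]["content"]["threat_signal"]
    return ThreatSignal(
        prohibited_content=bool(signal["prohibited_content"]),
        suspicious_activity_detected=bool(signal["suspicious_activity_detected"]),
        sentiment_rating=signal["sentiment_rating"],
        sentiment_score=int(signal["sentiment_score"]),
    )


def should_block(signal: ThreatSignal, sentiment_floor: int = -50) -> bool:
    """Example policy (an ASSUMPTION, tune it): block on either hard flag,
    or when sentiment is strictly below the floor."""
    return (
        signal.prohibited_content
        or signal.suspicious_activity_detected
        or signal.sentiment_score < sentiment_floor
    )
```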

Contextual Analysis

In addition to the high-level flags, TrustPath.io provides detailed explanations for each risk indicator through:

- Compliance Details: explains legal or regulatory violations (e.g., promotion of prescription drugs without a license).

- Fraud Indicators: highlights potential scam patterns (e.g., "too good to be true" offers or misleading urgency).

- Sentiment Insights: provides context on negative or manipulative language that may harm platform integrity.

These details are useful for:

- Blocking or flagging harmful content before publication

- Providing users with clear reasons when rejecting submissions

- Auditing moderation decisions for transparency and compliance

- Customizing workflows based on severity or violation type

By leveraging both the binary threat signals and the narrative insights, you can build a robust and explainable content moderation system tailored to your platform's policies and risk tolerance.
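The narrative insights can be flattened into one list and ranked by severity, which supports both user-facing rejection reasons and severity-based workflows. The Python below is a minimal sketch that reads the compliance, fraud, and sentiment arrays under data.content.details, as they appear in the example response below. Only "high" appears as a severity in that example; the "medium" and "low" values in SEVERITY_RANK are assumptions, and unknown severities sort last. Indication, collect_indications, and rejection_reasons are hypothetical names.

```python
from dataclasses import dataclass
from typing import List

# Only "high" appears in the example response; "medium" and "low" are
# ASSUMED values. Unknown severities rank after all known ones.
SEVERITY_RANK = {"high": 0, "medium": 1, "low": 2}
UNKNOWN_RANK = len(SEVERITY_RANK)


@dataclass
class Indication:
    category: str  # "compliance", "fraud", or "sentiment"
    indication_type: str
    severity: str
    details: str


def collect_indications(response: dict) -> List[Indication]:
    """Flatten data.content.details into one list, most severe first."""
    details = response["data"]["content"].get("details", {})
    found = [
        Indication(category, item["indication_type"], item["severity"], item["details"])
        for category in ("compliance", "fraud", "sentiment")
        for item in details.get(category, [])
    ]
    # sorted() is stable, so equal severities keep their category order.
    return sorted(found, key=lambda i: SEVERITY_RANK.get(i.severity, UNKNOWN_RANK))


def rejection_reasons(indications: List[Indication], min_severity: str = "high") -> List[str]:
    """Indication types at or above min_severity, e.g. to show the user."""
    cutoff = SEVERITY_RANK[min_severity]
    return [
        i.indication_type
        for i in indications
        if SEVERITY_RANK.get(i.severity, UNKNOWN_RANK) <= cutoff
    ]
```

Storing the full Indication list alongside each decision also gives you the audit trail mentioned above.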

You can see the API response below. Note that some fields have been omitted for brevity.

```json
{
  "success": true,
  "data": {
    "event_id": "7e824715-6b53-4246-b7f8-3fe978004491",
    "score": {
      "fraud_score": 100.0,
      "state": "decline",
      "details": [
        { "rule_name": "Content is prohibited", "score": 40 },
        { "rule_name": "Content contains suspicious activity", "score": 40 },
        { "rule_name": "Content risk score is higher than 50", "score": 40 },
        { "rule_name": "Content sentiment is negative", "score": 10 },
        { "rule_name": "Content sentiment score is below -50 ", "score": 10 }
      ]
    },
    "content": {
      "detected_language": "en",
      "threat_signal": {
        "prohibited_content": true,
        "suspicious_activity_detected": true,
        "content_risk_score": 85,
        "sentiment_rating": "negative",
        "sentiment_score": -70
      },
      "details": {
        "compliance": [
          {
            "indication_type": "Illegal sale of prescription medication",
            "severity": "high",
            "details": "The advertisement promotes the sale of medications without a prescription, which is illegal in many jurisdictions."
          }
        ],
        "fraud": [
          {
            "indication_type": "Potential scam",
            "severity": "high",
            "details": "The offer of a 90% discount on medications is suspicious and may indicate a fraudulent scheme."
          }
        ],
        "sentiment": [
          {
            "indication_type": "Negative sentiment towards the offer",
            "severity": "high",
            "details": "The language used suggests urgency and discounts that are typically associated with scams."
          }
        ]
      }
    }
  }
}
```
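For auditing, it can help to store a condensed record of each response next to your moderation decision. The Python below is a sketch that parses a trimmed copy of the example response above and extracts the event ID, state, total score, and the rules that fired. Two details are observations about this example rather than documented behavior: the per-rule scores add up to 140 while fraud_score is 100.0, so the sketch reads the total instead of recomputing it, and one rule name carries a trailing space, so names are stripped. Treating "success": false as an error is also an assumption. audit_record is a hypothetical name.

```python
import json

# Trimmed copy of the example response above (content.details omitted).
EXAMPLE_RESPONSE = """
{
  "success": true,
  "data": {
    "event_id": "7e824715-6b53-4246-b7f8-3fe978004491",
    "score": {
      "fraud_score": 100.0,
      "state": "decline",
      "details": [
        {"rule_name": "Content is prohibited", "score": 40},
        {"rule_name": "Content contains suspicious activity", "score": 40},
        {"rule_name": "Content risk score is higher than 50", "score": 40},
        {"rule_name": "Content sentiment is negative", "score": 10},
        {"rule_name": "Content sentiment score is below -50 ", "score": 10}
      ]
    },
    "content": {
      "detected_language": "en",
      "threat_signal": {
        "prohibited_content": true,
        "suspicious_activity_detected": true,
        "content_risk_score": 85,
        "sentiment_rating": "negative",
        "sentiment_score": -70
      }
    }
  }
}
"""


def audit_record(response: dict) -> dict:
    """Condense a decoded response into a record worth storing."""
    # ASSUMPTION: "success": false signals a failed assessment.
    if not response.get("success"):
        raise ValueError("TrustPath.io assessment was not successful")
    data = response["data"]
    score = data["score"]
    signal = data["content"]["threat_signal"]
    return {
        "event_id": data["event_id"],
        "state": score["state"],
        # Read the total directly; in this example the rule scores sum to 140.
        "fraud_score": score["fraud_score"],
        # strip() because one example rule name has a trailing space.
        "rules_fired": [rule["rule_name"].strip() for rule in score["details"]],
        "language": data["content"]["detected_language"],
        "content_risk_score": signal["content_risk_score"],
    }


record = audit_record(json.loads(EXAMPLE_RESPONSE))
print(json.dumps(record, indent=2))
```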

With this integration, you're not just preventing obvious spam or low-effort abuse—you're building a scalable system to handle complex content moderation challenges. TrustPath.io empowers you to automatically detect and block high-risk content such as scam promotions, illegal product listings, hate speech, or psychologically manipulative messages. Beyond basic keyword filtering, the system uses risk scoring, sentiment analysis, and content classification to evaluate context and intent. This allows your platform to proactively manage compliance risks, protect your community, and maintain brand integrity—all while reducing the burden on manual review teams.